Pushing Logs to S3

This page summarizes some common third-party tools that ChaosSearch users rely on to push logs to Amazon S3.

Supported Tools:

- Logstash
- FluentD
- Fluent Bit
- Cloudflare Logpush
- Fastly Log Streaming
- Vector

NOTE: This topic contains examples that might be useful in your environment. Review them carefully, because your use case might require additional configuration or special handling. Links to the relevant documentation are provided before each example.

Logstash

Logstash is an application for ingesting and transforming content from multiple sources before sending it to your storage location. More information is available at:

https://www.elastic.co/guide/en/logstash/current/plugins-outputs-s3.html
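The `filter` stage in the sample configuration below does three things. `dissect` splits each line on fixed delimiters and captures everything after the `]:` delimiter as `restOfLine`. The `json` filter then parses that text. Finally, `mutate` removes the raw fields. The following Python sketch approximates that chain so you can check the pattern against your own log lines before deploying it. It is not Logstash's implementation: it handles only named fields (`%{name}`) and empty skip fields (`%{}`), and the sample log line is hypothetical.

```python
import json
import re

# Pattern copied from the sample Logstash dissect filter.
PATTERN = "%{} %{} %{} %{y} %{} %{} %{}]:%{restOfLine}"


def parse_pattern(pattern):
    """Split a dissect pattern into its leading text and (field, delimiter) pairs."""
    names = re.findall(r"%\{([^}]*)\}", pattern)
    delimiters = re.split(r"%\{[^}]*\}", pattern)
    # delimiters[0] precedes the first field; delimiters[i + 1] follows field i.
    return delimiters[0], list(zip(names, delimiters[1:]))


def dissect(line, pattern):
    """Return the named fields from line, or None if the pattern does not match."""
    prefix, fields = parse_pattern(pattern)
    if not line.startswith(prefix):
        return None
    pos = len(prefix)
    result = {}
    for name, delimiter in fields:
        if delimiter:
            end = line.find(delimiter, pos)
            if end == -1:
                return None
        else:
            end = len(line)  # a trailing field takes the rest of the line
        if name:  # %{} is a skip field, so its value is discarded
            result[name] = line[pos:end]
        pos = end + len(delimiter)
    return result


def apply_filter(message):
    """Approximate the dissect -> json -> mutate(remove_field) chain."""
    event = {"message": message}
    fields = dissect(message, PATTERN)
    if fields is None:
        # Logstash would keep the event and tag it with _dissectfailure instead.
        return event
    event.update(fields)
    # The json filter without a target merges the parsed keys into the event root.
    event.update(json.loads(event["restOfLine"]))
    for key in ("message", "restOfLine"):
        event.pop(key, None)
    return event


# Hypothetical input line: seven prefix fields, then "]:" and a JSON payload.
sample = '2023-10-11 22:14:15 UTC web01 myapp info [pid:42]:{"status": 200, "path": "/"}'
print(apply_filter(sample))  # {'y': 'web01', 'status': 200, 'path': '/'}
```

A line that does not contain all of the delimiters falls through unchanged. That makes it easy to spot lines the pattern does not cover.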

A sample Logstash configuration file for S3 storage and a ChaosSearch implementation follows:

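```
input {
  file {
    path => "/Users/chaossearch/*"
    start_position => "beginning"
  }
}

filter {
  # Skip the line prefix and keep the JSON payload after "]:" in restOfLine.
  dissect { mapping => { "message" => "%{} %{} %{} %{y} %{} %{} %{}]:%{restOfLine}" } }
  # Parse restOfLine as JSON; the parsed keys are added to the event.
  json { source => "restOfLine" }
  # Drop the raw line and the intermediate field once they are parsed.
  mutate {
    remove_field => [ "message", "restOfLine" ]
  }
}

output {
  # Print each event to the console for debugging.
  stdout { codec => rubydebug }
  s3 {
    access_key_id => "ACCESS-KEY-HERE"
    secret_access_key => "SECRET-ACCESS-KEY-HERE"
    region => "us-east-1"
    bucket => "S3-BUCKET-HERE"
    # Rotate the upload file at 2048 bytes or after 3 minutes.
    size_file => 2048
    time_file => 3
    codec => json_lines
    canned_acl => "private"
    temporary_directory => "C:\\Users\\Administrator\\s3tmp"
  }
}
```

FluentD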

FluentD is an open-source data collector that helps unify data collection and consumption. It tries to unify data formats by structuring data as JSON wherever possible. More information is available at:

https://docs.fluentd.org/output/s3
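In the sample configuration below, `timekey 3600` groups events into one-hour buffer chunks. `timekey_wait 10m` delays flushing each chunk until 10 minutes after its hour ends, so late events can still be added to it. The following Python sketch works through that arithmetic and the resulting object key.

It assumes the following fluent-plugin-s3 defaults, which the sample does not override:

- an `s3_object_key_format` of `%{path}%{time_slice}_%{index}.%{file_extension}`
- an hourly `%Y%m%d%H` time slice for a one-hour `timekey`
- gzip storage, which uses the `gz` extension

Check these defaults against the plugin version you run.

```python
from datetime import datetime, timezone

# Values taken from the sample <match> and <buffer> sections.
PATH = "logs/"
TIMEKEY = 3600        # timekey 3600 -> one-hour chunks
TIMEKEY_WAIT = 600    # timekey_wait 10m, in seconds

# Assumed plugin defaults (not set in the sample configuration).
TIME_SLICE_FORMAT = "%Y%m%d%H"
FILE_EXTENSION = "gz"


def chunk_start(event_time):
    """Start of the timekey window (epoch seconds) that an event falls into."""
    return event_time - event_time % TIMEKEY


def flush_time(event_time):
    """When the chunk holding this event is flushed: window end plus timekey_wait."""
    return chunk_start(event_time) + TIMEKEY + TIMEKEY_WAIT


def object_key(event_time, index=0):
    """Expand %{path}%{time_slice}_%{index}.%{file_extension} for a chunk."""
    # timekey_use_utc true -> the time slice is formatted in UTC.
    start = datetime.fromtimestamp(chunk_start(event_time), tz=timezone.utc)
    return f"{PATH}{start.strftime(TIME_SLICE_FORMAT)}_{index}.{FILE_EXTENSION}"


event = int(datetime(2023, 10, 11, 11, 59, 30, tzinfo=timezone.utc).timestamp())
print(object_key(event))  # logs/2023101111_0.gz
print(datetime.fromtimestamp(flush_time(event), tz=timezone.utc))  # 2023-10-11 12:10:00+00:00
```

An event logged at 11:59:30 therefore lands in the 11:00 chunk, which is written to S3 at about 12:10.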

A sample FluentD configuration file for S3 storage and a ChaosSearch implementation follows:

```
<match pattern>
  @type s3

  aws_key_id YOUR_AWS_KEY_ID
  aws_sec_key YOUR_AWS_SECRET_KEY
  s3_bucket YOUR_S3_BUCKET_NAME
  s3_region ap-northeast-1

  path logs/
  # To use ${tag} or %Y/%m/%d-style placeholders in path or s3_object_key_format,
  # add tag (for ${tag}) and time (for %Y/%m/%d) as <buffer> chunk keys.
  <buffer tag,time>
    @type file
    path /var/log/fluent/s3
    timekey 3600 # 1 hour partition
    timekey_wait 10m
    timekey_use_utc true # use utc
    chunk_limit_size 256m
  </buffer>
</match>
```

Fluent Bit

Fluent Bit is a lightweight alternative to FluentD and Logstash for log shipping. It offers high performance with low resource consumption, and it is built on the architecture and general design of FluentD. More information about how Fluent Bit sends content to S3 is available on the Amazon S3 output plugin page of the Fluent Bit documentation.

More information about how Fluent Bit obtains AWS credentials is available in the Fluent Bit documentation on GitHub.
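The `s3_key_format` value in the sample configuration below combines the `$TAG` placeholder with strftime specifiers. Fluent Bit formats the time portion of the key in UTC. The following Python sketch shows roughly how such a key expands for a given tag and timestamp, which can help you plan the prefix layout in your bucket.

It covers only these elements:

- `$TAG`
- `$TAG[n]`, with tag parts split on `.` (the assumed default for `s3_key_format_tag_delimiters`)
- strftime fields

It ignores `$UUID`, `$INDEX`, and any suffix Fluent Bit might append. The tag `app.web` is hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone

# Value copied from the sample [OUTPUT] section.
S3_KEY_FORMAT = "/fluent-bit-logs/$TAG/%Y/%m/%d/%H"


def expand_key(key_format, tag, when, delimiters="."):
    """Approximate Fluent Bit's expansion of $TAG, $TAG[n], and strftime fields."""
    # Split the tag into parts on any of the delimiter characters.
    parts = re.split("[" + re.escape(delimiters) + "]", tag)
    # Expand time fields first, in UTC, so a '%' inside the tag is never interpreted.
    key = when.astimezone(timezone.utc).strftime(key_format)
    # Replace $TAG[n] before $TAG so the indexed form is not partially consumed.
    key = re.sub(r"\$TAG\[(\d+)\]", lambda m: parts[int(m.group(1))], key)
    return key.replace("$TAG", tag)


# Hypothetical tag and timestamp (09:30 at UTC-5 is 14:30 UTC).
when = datetime(2023, 10, 11, 9, 30, tzinfo=timezone(timedelta(hours=-5)))
print(expand_key(S3_KEY_FORMAT, "app.web", when))  # /fluent-bit-logs/app.web/2023/10/11/14
print(expand_key("/logs/$TAG[1]/%Y/%m/%d", "app.web", when))  # /logs/web/2023/10/11
```

Because the hour comes from UTC, a host in another time zone still writes under the UTC hour. Plan any date-based prefixes accordingly.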

A sample Fluent Bit configuration file for S3 storage and a ChaosSearch implementation follows:

[OUTPUT]

Name s3

Match *

bucket YOUR_S3_BUCKET_NAME

region us-east-1

store_dir /home/ec2-user/buffer

s3_key_format /fluent-bit-logs/$TAG/%Y/%m/%d/%H

total_file_size 50M

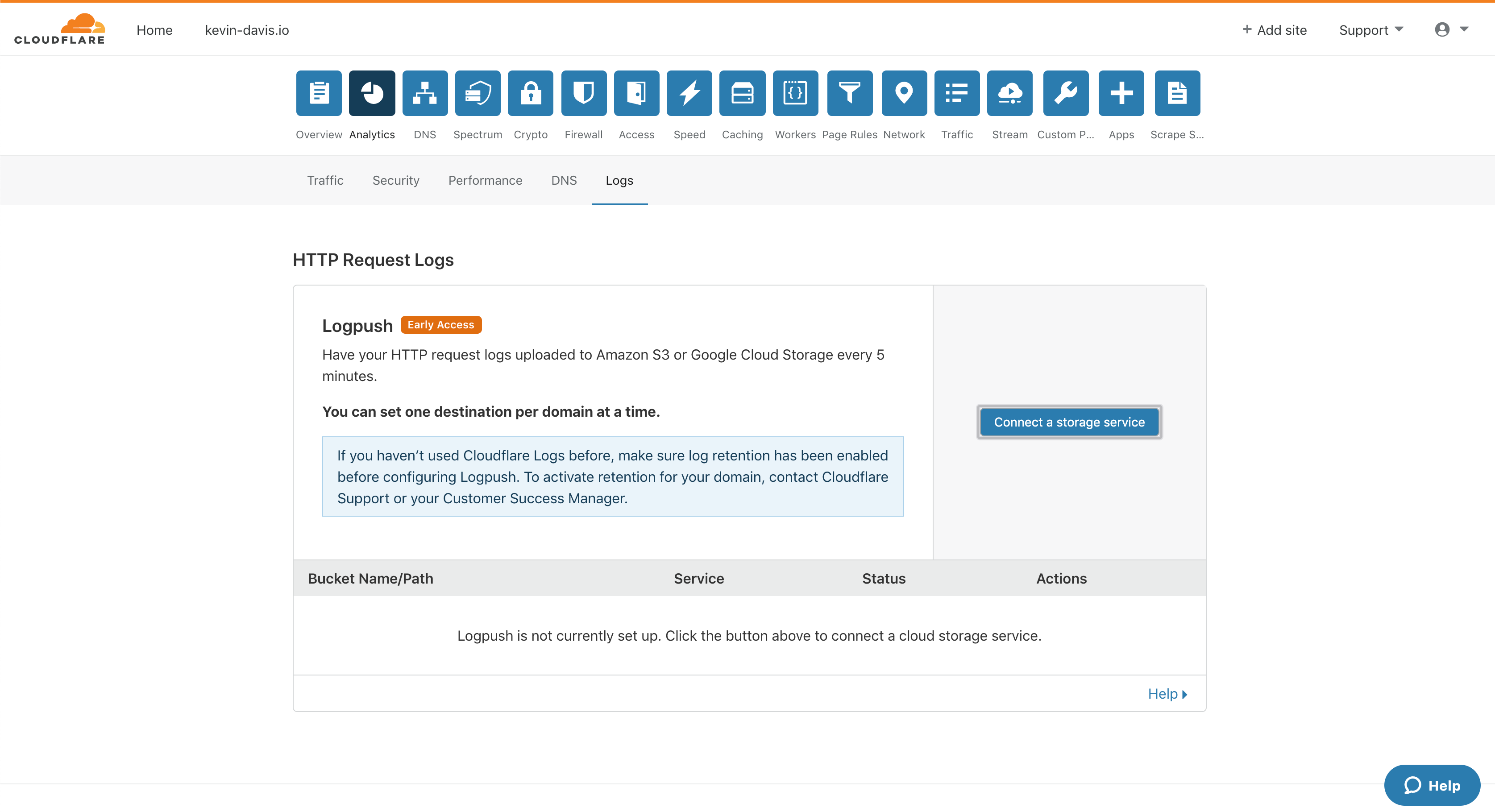

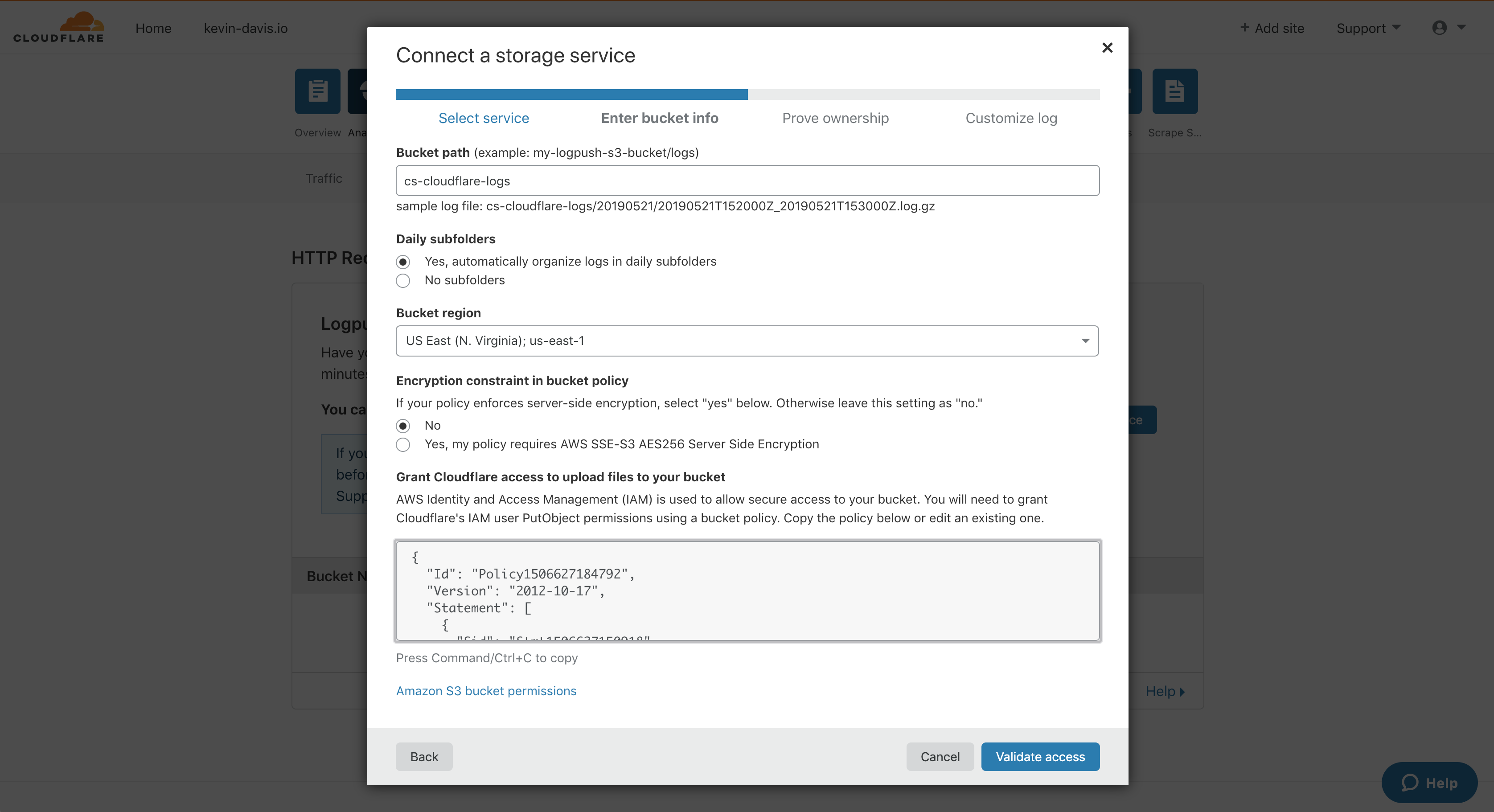

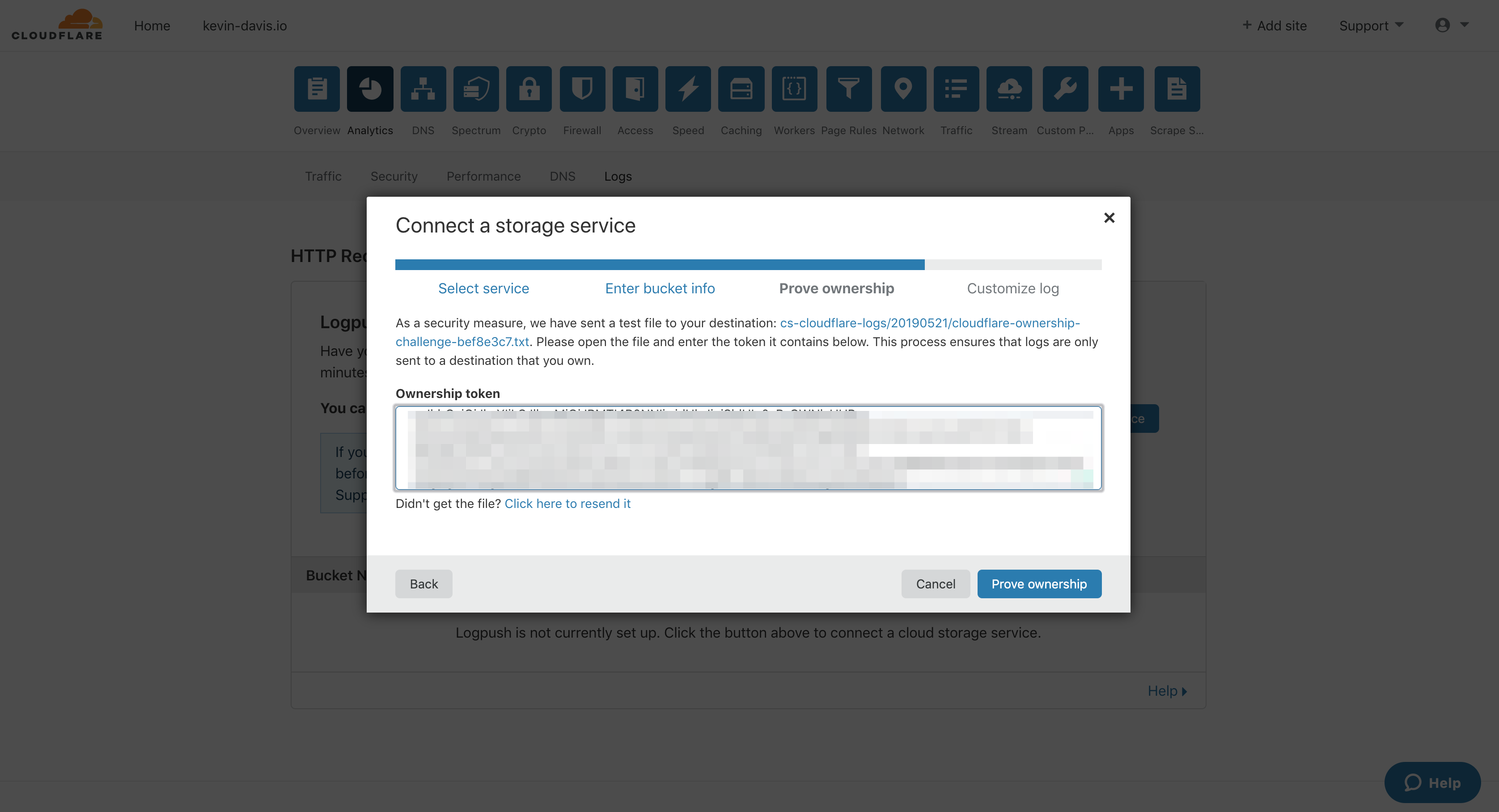

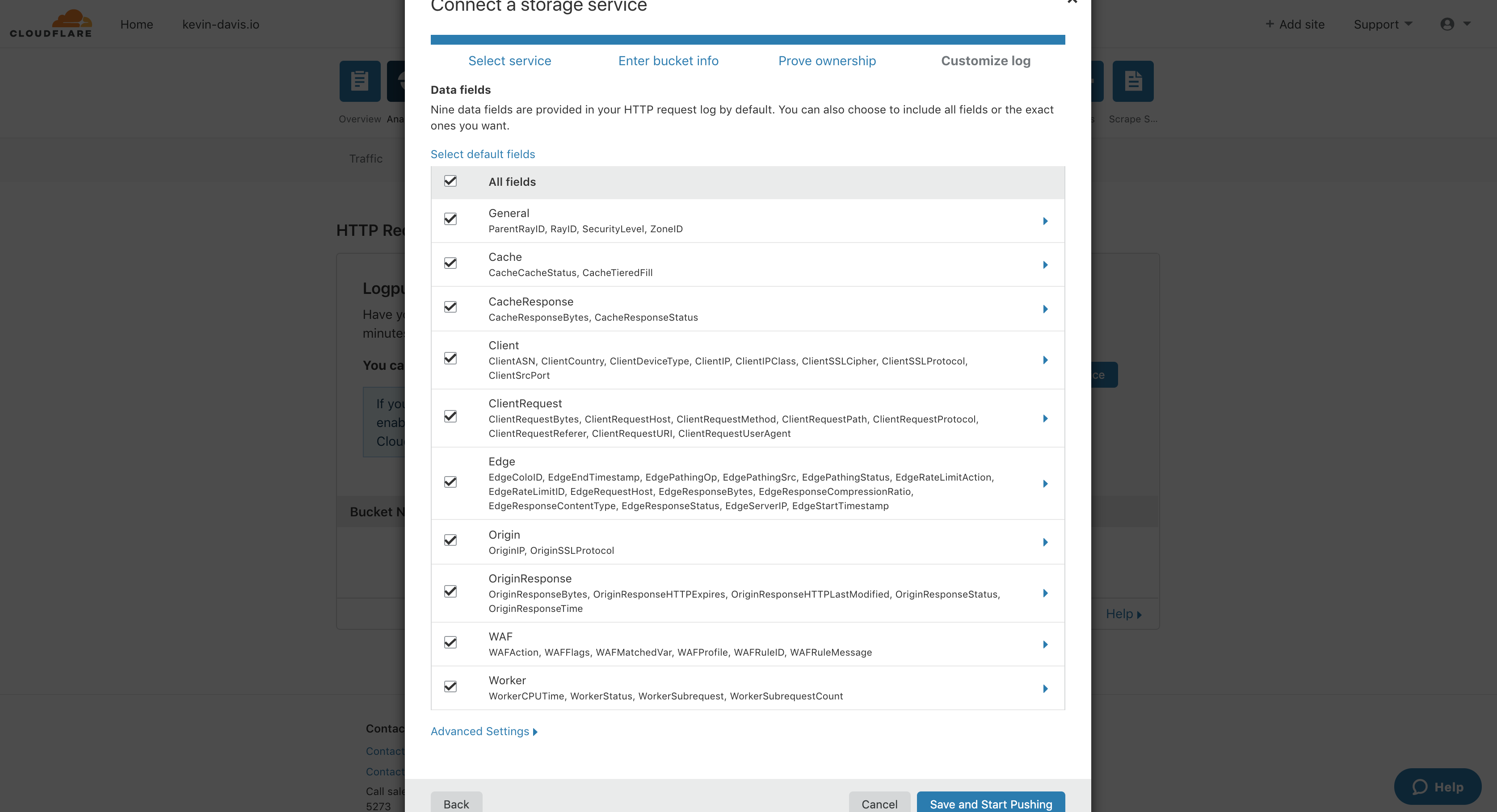

upload_timeout 10mCloudflare Logs via LogPusher

Cloudflare Enterprise provides detailed logs that customers can use for troubleshooting and investigation. Enterprise users typically push their request or event logs to cloud storage with Logpush, which can be configured from the Cloudflare dashboard or through the API. More information is available at:

https://developers.cloudflare.com/logs/
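Logpush jobs can also be created through the Cloudflare API. The following Python sketch only builds a job-creation request; it does not send it.

It assumes the following API details, based on Cloudflare's Logpush API documentation at the time of writing:

- the zone-level `logpush/jobs` endpoint
- the `destination_conf`, `dataset`, and `ownership_challenge` fields
- the `s3://<bucket>/<path>?region=<region>` destination format

In that flow, Cloudflare first writes an ownership challenge file into the bucket, and you pass the token from that file as `ownership_challenge`. Verify each step against the current Cloudflare docs.

The zone ID, API token, bucket path, job name, and challenge value are all placeholders.

```python
import json
import urllib.request

API_BASE = "https://api.cloudflare.com/client/v4"

# Placeholders: replace with your own values.
ZONE_ID = "YOUR_ZONE_ID"
API_TOKEN = "YOUR_API_TOKEN"
BUCKET_PATH = "YOUR_S3_BUCKET/cloudflare/http_requests"
REGION = "us-east-1"
OWNERSHIP_CHALLENGE = "TOKEN_FROM_CHALLENGE_FILE"


def build_logpush_job_request(zone_id, token, bucket_path, region, challenge,
                              dataset="http_requests"):
    """Build (but do not send) a request that creates an S3 Logpush job."""
    body = {
        "name": "chaossearch-s3",  # hypothetical job name
        "destination_conf": f"s3://{bucket_path}?region={region}",
        "dataset": dataset,
        "ownership_challenge": challenge,
        "enabled": True,
    }
    return urllib.request.Request(
        url=f"{API_BASE}/zones/{zone_id}/logpush/jobs",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_logpush_job_request(ZONE_ID, API_TOKEN, BUCKET_PATH, REGION, OWNERSHIP_CHALLENGE)
print(request.get_method(), request.full_url)
# To actually submit the job: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes it easy to inspect the payload before any job is created.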

Sample configuration screens for a Cloudflare Logpush setup for S3 storage and a ChaosSearch implementation follow:

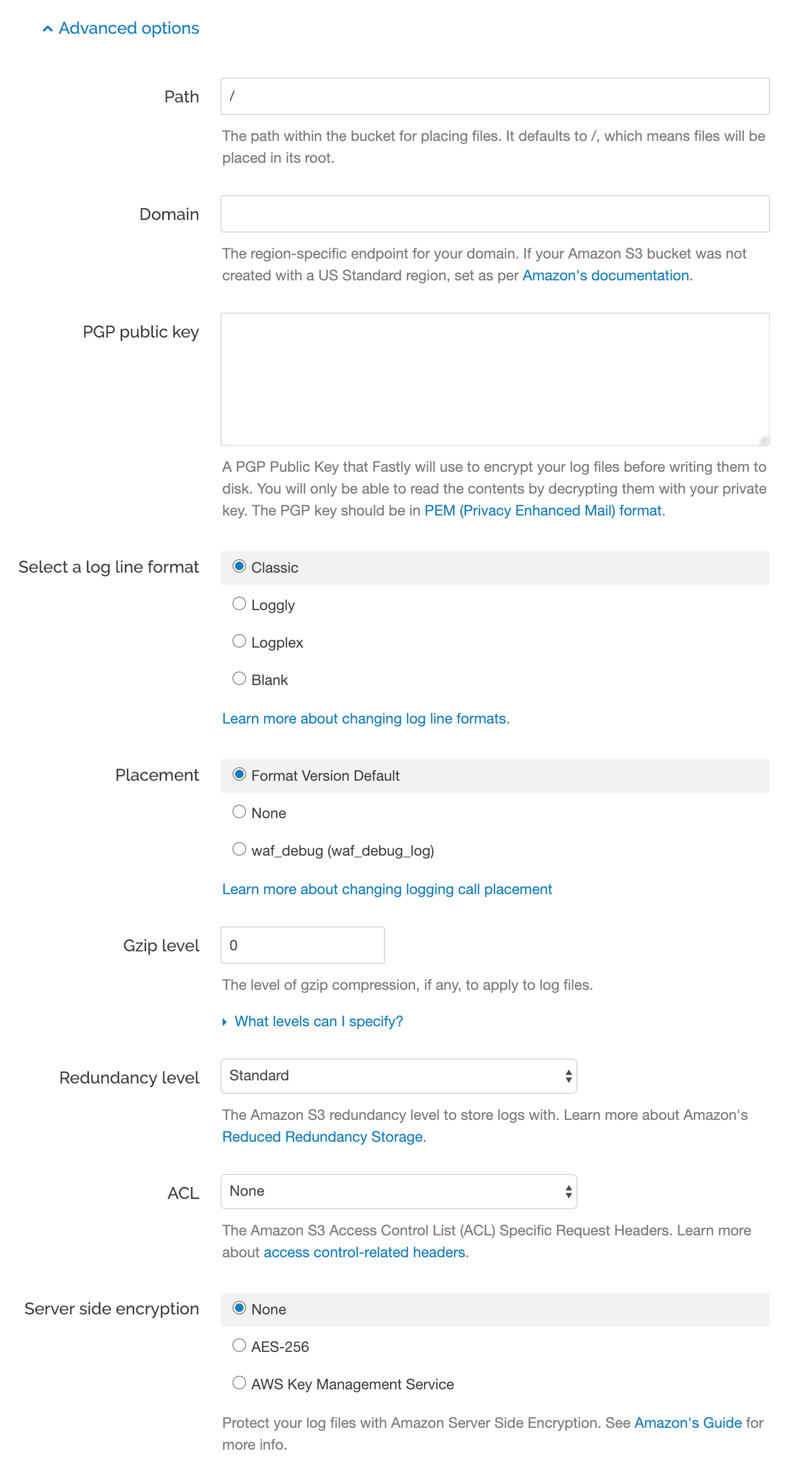

Fastly - Log Streaming

Fastly has a Log Streaming feature that allows you to automatically save logs to a third-party service for storage and analysis. More information is available at:

https://docs.fastly.com/en/guides/log-streaming-amazon-s3

A sample screen for creating a Fastly S3 logging endpoint configuration for a ChaosSearch implementation follows:

Vector

Vector is a tool that collects, transforms, and routes logs and metrics to destinations such as cloud storage. More information is available at:

https://vector.dev/docs/reference/configuration/sinks/aws_s3/

A sample Vector configuration file for pushing logs to S3 for storage and a ChaosSearch implementation follows:

```
[sinks.my_sink_id]
# REQUIRED - General
type = "aws_s3" # must be: "aws_s3"
inputs = ["my-source-id"]
bucket = "my-bucket"
region = "us-east-1"

# OPTIONAL - General
healthcheck = true # default
hostname = "127.0.0.0:5000"

# OPTIONAL - Batching
batch_size = 10490000 # default, bytes
batch_timeout = 300 # default, seconds

# OPTIONAL - Object Names
filename_append_uuid = true # default
filename_extension = "log" # default
filename_time_format = "%s" # default
key_prefix = "date=%F/"

# OPTIONAL - Requests
compression = "gzip" # no default, must be: "gzip" (if supplied)
encoding = "ndjson" # no default, enum: "ndjson" or "text"
gzip = false # default
rate_limit_duration = 1 # default, seconds
rate_limit_num = 5 # default
request_in_flight_limit = 5 # default
request_timeout_secs = 30 # default, seconds
retry_attempts = 5 # default
retry_backoff_secs = 5 # default, seconds

# OPTIONAL - Buffer
[sinks.my_sink_id.buffer]
type = "memory" # default, enum: "memory" or "disk"
when_full = "block" # default, enum: "block" or "drop_newest"
max_size = 104900000 # no default, bytes, relevant when type = "disk"
num_items = 500 # default, events, relevant when type = "memory"
```
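The object-name options in the sample configuration above control how Vector names each batch it uploads. The following Python sketch assumes that Vector assembles the key from these parts, in order:

1. `key_prefix`
2. the batch time, formatted with `filename_time_format`
3. `-` and a UUID, when `filename_append_uuid` is true
4. `.` and `filename_extension`

That ordering is an assumption about the Vector release this sample targets. Compare it with the keys that actually appear in your bucket.

```python
import uuid
from datetime import datetime, timezone


def vector_strftime(fmt, when):
    """strftime with %F and %s expanded explicitly, since Python does not support them portably."""
    fmt = fmt.replace("%F", "%Y-%m-%d").replace("%s", str(int(when.timestamp())))
    return when.strftime(fmt)


def object_key(when, key_prefix="date=%F/", time_format="%s",
               append_uuid=True, extension="log"):
    """Assemble an S3 key from the sample's object-name options (assumed layout)."""
    name = vector_strftime(time_format, when)
    if append_uuid:
        # A random UUID keeps keys unique when several batches share the same second.
        name = f"{name}-{uuid.uuid4()}"
    return f"{vector_strftime(key_prefix, when)}{name}.{extension}"


batch_time = datetime(2023, 10, 11, 12, 0, tzinfo=timezone.utc)
print(object_key(batch_time))                     # e.g. date=2023-10-11/1697025600-<random uuid>.log
print(object_key(batch_time, append_uuid=False))  # date=2023-10-11/1697025600.log
```

With `key_prefix = "date=%F/"`, each day's objects share one prefix. That makes it straightforward to select a date range of objects in the bucket.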